Inference as Parenting: How LLMs Grow Up & What It Means for AI Agents

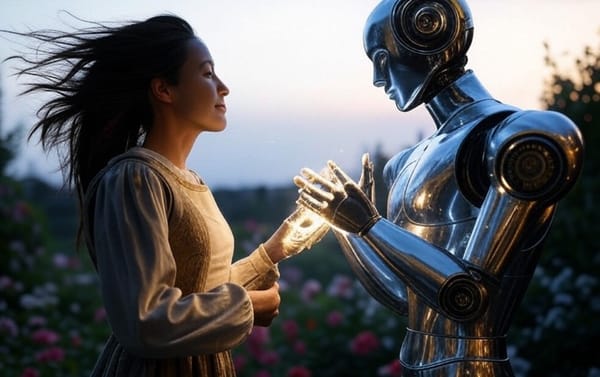

AI development as a mirror to parenting? Like children, inference-based AI grows by encountering different worldviews, learning not just from data but from the rich tapestry of human experience.

Preface: This article emerged from a series of conversations with DeepSeek, ChatGPT, and Claude.AI about the ramifications of inference training on self-hosted AI language models.

Think back to your childhood. Whether you grew up with strict rules or the freedom to explore, those early experiences fundamentally shaped who you are today. But perhaps the most transformative moments weren’t about the rules themselves. They were about encountering ideas that challenged your family’s worldview, like discovering the truth about Santa Claus or realizing that different families had different rules about dinner time or screen time. These moments of cognitive dissonance were where you began to develop your own understanding of the world.

Today, as open-weight models such as DeepSeek make it easier to self-host an LLM, it’s increasingly possible for individuals to shape these AI agents, sometimes in unintended or unpredictable ways. Anyone can become an AI “parent” by steering a model’s behavior, imparting values, or even exposing it to contradictory ideas.

While this analogy highlights how core values can be shaped by experience, it’s worth noting that AI systems, unlike human children, don’t possess emotions, consciousness, or a sense of identity, and they don’t learn in the same holistic way humans do. Large language models (LLMs) model patterns in text and generate responses accordingly. A deployed model’s weights also don’t change from one conversation to the next unless someone fine-tunes it; what users shape in the moment is its context: the system prompt, the examples, and the conversation so far. Still, much like children, LLMs can absorb cultural nuances and mirror “rules” and “values” from their training data, any fine-tuning, and the context they are given.

This article aims to make these concepts more tangible for a broad audience—whether you’re new to AI or an experienced technologist—by drawing parallels between AI development and child development. In the following sections, we’ll examine how these AI “parenting styles” translate into very real challenges for AI systems—much like the tumultuous but formative years of childhood.

The Growing Pains of AI Inference

The conversation around AI is shifting from technical capabilities to value imprinting—how we instill culture, purpose, and ethical frameworks in these systems. Just as a young child must learn to navigate the sometimes contradictory messages between home and school, AI systems must develop frameworks for handling situations where their training values collide with novel or contradictory real-world scenarios.

Modern inference represents this crucial developmental stage: the moment when an LLM transitions from being a passive knowledge repository to an active participant trying to make sense of complex social dynamics. It’s not just about applying rules; it’s about developing the capacity to recognize when rules need flexible interpretation or when different value systems are in play.

While synthetic data and traditional training methods remain important, they’re like teaching a child through textbooks alone. Today’s AI needs to develop something akin to social intelligence: the ability to grasp conversational nuances, recognize contextual shifts, and adapt to real-world complexity far beyond what’s captured in historical datasets.

Parenting Styles as AI Training Approaches

Just as there’s no universal blueprint for raising children, AI development takes various forms, each with distinct implications for how these systems learn to handle value conflicts:

- The Authoritative Approach: Creates balanced systems with clear boundaries but room for adaptation. Like a parent who helps their child understand why different families have different rules, this approach helps AI systems develop flexible but grounded responses to novel situations.

- The Permissive Approach: Emphasizes creativity and flexibility, though potentially at the cost of consistency. This mirrors raising a child with few rigid rules, allowing them to discover their own values through experience—but potentially leaving them unprepared for situations requiring firm boundaries.

- The Authoritarian Approach: Delivers predictable results but may struggle with novel scenarios. Similar to strict parenting that emphasizes absolute rules, these systems might excel in controlled environments but falter when facing situations requiring nuanced value judgments.

- The Attachment/Humanistic Approach: Centers on emotional intelligence and value reasoning. This approach focuses on developing strong core principles while building the capacity for empathy and understanding—like teaching a child not just what to think, but how to think about ethical decisions.
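For readers who self-host a model, one rough way to make these four styles concrete is as steering presets. The sketch below is an illustration under stated assumptions, not a training recipe: it steers at inference time only, the prompt wording and temperature values are invented for this example, and the request shape follows the common system/user chat-message convention, so field names may differ on your server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParentingStyle:
    """Hypothetical steering preset: a system prompt plus a sampling temperature."""
    system_prompt: str
    temperature: float


# Illustrative values only; none of these come from a real product or paper.
STYLES = {
    "authoritative": ParentingStyle(
        "Follow the core rules, and explain your reasoning when a "
        "situation seems to call for an exception.",
        0.7,
    ),
    "permissive": ParentingStyle(
        "Explore ideas freely and favor creative answers.",
        1.0,
    ),
    "authoritarian": ParentingStyle(
        "Follow the rules exactly. Decline anything they do not cover.",
        0.0,
    ),
    "attachment": ParentingStyle(
        "Consider the user's perspective first, then reason from core "
        "principles and explain how you reached your answer.",
        0.7,
    ),
}


def build_request(style_name: str, user_message: str) -> dict:
    """Build a chat-style request body for the chosen preset."""
    style = STYLES[style_name]
    return {
        "messages": [
            {"role": "system", "content": style.system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": style.temperature,
    }


if __name__ == "__main__":
    print(build_request("authoritative", "Can I stay up past bedtime?"))
```

Sending the same user message through each preset and comparing the replies is a quick way to see the consistency versus flexibility trade-off described in the list above.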

The Developmental Challenge

Just as children around age 6 begin to encounter and process differences between their family’s values and broader societal norms, AI systems must learn to navigate similar territories. They need frameworks for:

- Recognizing when different value systems are in play

- Maintaining consistent core principles while adapting to different contexts

- Developing nuanced responses to situations where simple rules don’t apply

- Building the capacity to explain their reasoning in ways users can understand

Moving Forward

These growing pains underscore a critical reality: the complexity of human society—and the diversity of our moral frameworks—cannot be captured by data alone. Just as children grow by encountering perspectives beyond their immediate family, AI agents are going to mature by navigating unfamiliar scenarios and sometimes clashing values. In this sense, we are all contributors to the “social environment” in which AI develops, whether we’re end users, developers, or policy-makers.

Ultimately, the future of AI isn’t just about scaling up what these systems can do. It’s about how they learn to reconcile competing values, navigate nuanced ethical dilemmas, and communicate their reasoning in ways humans find understandable.

Like shaping a child’s worldview, instilling robust, adaptable principles in AI is an ongoing responsibility—one that demands creativity, vigilance, and a willingness to learn from the very systems we set out to teach.

How might we design AI feedback loops so that every user interaction serves as a constructive form of guidance rather than a source of confusion? And is there an opportunity to establish community-led or regulatory guardrails so that AI systems don’t inherit, or amplify, dangerous biases?
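As one possible starting point for the feedback-loop question, the sketch below separates feedback that users agree on from feedback where they disagree, so that contested cases go to human review instead of being treated as guidance. Everything here is hypothetical: the function name, the vote format (+1 or -1 per response), and the thresholds are assumptions chosen for illustration.

```python
from collections import defaultdict


def summarize_feedback(events, min_votes=3, agreement=0.8):
    """Label each response by how consistently users rated it.

    events: iterable of (response_id, vote) pairs, where vote is +1 or -1.
    Returns a dict mapping response_id to one of:
    "reinforce", "discourage", "contested", or "insufficient".
    """
    votes = defaultdict(list)
    for response_id, vote in events:
        votes[response_id].append(vote)

    summary = {}
    for response_id, vs in votes.items():
        if len(vs) < min_votes:
            # Too few ratings to treat as guidance either way.
            summary[response_id] = "insufficient"
            continue
        share_up = sum(1 for v in vs if v > 0) / len(vs)
        if share_up >= agreement:
            summary[response_id] = "reinforce"
        elif share_up <= 1 - agreement:
            summary[response_id] = "discourage"
        else:
            # Users disagree: a value conflict for people to review,
            # not a signal to learn from automatically.
            summary[response_id] = "contested"
    return summary


if __name__ == "__main__":
    sample = [("a", 1), ("a", 1), ("a", 1), ("b", 1), ("b", -1), ("b", 1), ("b", -1)]
    print(summarize_feedback(sample))
```

The design choice worth noting is the explicit "contested" bucket: mixed feedback is kept visible as a disagreement between value systems instead of being averaged into a single score.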

Moving forward, it’s vital to consider how we collectively “parent” the next generation of AI.